>> 10 new open source projects and developments, worth knowing about

-

TreeSheets

I feel like a complete idiot for never having heard of TreeSheets!

The ultimate replacement for spreadsheets, mind mappers, outliners, PIMs, text editors and small databases.

Suitable for any kind of data organization, such as Todo lists, calendars, project management, brainstorming, organizing ideas, planning, requirements gathering, presentation of information, etc.

It’s like a spreadsheet, immediately familiar, but much more suitable for complex data because it’s hierarchical.

It’s like a mind mapper, but more organized and compact.

It’s like an outliner, but in more than one dimension.

It’s like a text editor, but with structure.

-

Flatpak - The future of application distribution

Flatpak has been created as an open source “container” / “package manager” solution, making it easy for developers to release one version of their software that runs across Linux distributions.

Flatpak is the new framework for desktop applications on Linux

Distributing applications on Linux is a pain: different distributions in multiple versions, each with their own versions of libraries and packaging formats. Flatpak is here to change all that. It allows the same app to be installed on different Linux distributions, including different versions. And it has been designed from the ground up with security in mind, so that apps are isolated from each other and from the host system.

The days of chasing multiple Linux distributions are over. Standalone apps for Linux are here!

-

pass

the standard unix password manager

Password management should be simple and follow Unix philosophy. With pass, each password lives inside of a gpg encrypted file whose filename is the title of the website or resource that requires the password. These encrypted files may be organized into meaningful folder hierarchies, copied from computer to computer, and, in general, manipulated using standard command line file management utilities.

pass makes managing these individual password files extremely easy. All passwords live in ~/.password-store, and pass provides some nice commands for adding, editing, generating, and retrieving passwords. It is a very short and simple shell script. It’s capable of temporarily putting passwords on your clipboard and tracking password changes using git.
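To make the storage layout described above concrete, here is a minimal Python sketch of how an entry name maps onto a file in the store. It is not part of pass (which is a shell script); the helper names `entry_path` and `list_entries` are hypothetical, and the sketch only mirrors the directory layout, assuming each entry is saved with a `.gpg` suffix. It never reads or decrypts anything.

```python
from pathlib import Path

# Default store location named in the text above.
DEFAULT_STORE = Path.home() / ".password-store"


def entry_path(name, store=DEFAULT_STORE):
    """Return the file that would hold the entry called `name`.

    Hypothetical helper: it mirrors the on-disk layout only
    (one gpg-encrypted file per entry, folders for grouping).
    """
    parts = [p for p in name.split("/") if p]
    if not parts or any(p in (".", "..") for p in parts):
        raise ValueError(f"invalid entry name: {name!r}")
    return Path(store).joinpath(*parts[:-1], parts[-1] + ".gpg")


def list_entries(store=DEFAULT_STORE):
    """List entry names by walking the store for *.gpg files."""
    store = Path(store)
    if not store.is_dir():
        return []
    return sorted(
        p.relative_to(store).with_suffix("").as_posix()
        for p in store.rglob("*.gpg")
    )
```

Because every entry is just a file, `entry_path("Email/example.com")` points at something you could copy, move or put under git with ordinary tools, which is the whole idea behind pass.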

-

Ever needed a table of contents in your Markdown documents?

DocToc automatically produces a table of contents (TOC) at any position in your document.

It generates a table of contents for Markdown files inside a local git repository. Links are compatible with the anchors generated by GitHub or, via a command line flag, by other sites.
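For anyone curious how such a tool can work, the following is a rough Python sketch of the idea: scan for headings and emit a nested list of links. It is not DocToc's code. The slug rule in `github_style_anchor` is a simplified assumption about GitHub-style anchors (lowercase, punctuation dropped, spaces turned into hyphens), and it ignores details such as duplicate headings.

```python
import re


def github_style_anchor(title):
    """Approximate a GitHub-style heading anchor (simplified assumption)."""
    slug = title.strip().lower()
    slug = re.sub(r"[^\w\- ]", "", slug)      # drop punctuation
    return slug.replace(" ", "-")


def build_toc(markdown, max_level=3):
    """Return a nested bullet list linking to each '#'-style heading."""
    fence = "`" * 3
    lines = []
    in_fence = False
    for line in markdown.splitlines():
        if line.startswith(fence):
            in_fence = not in_fence           # skip '#' lines inside code fences
            continue
        match = re.match(r"^(#{1,6})\s+(.*?)\s*#*\s*$", line)
        if in_fence or not match:
            continue
        level = len(match.group(1))
        if level > max_level:
            continue
        title = match.group(2)
        indent = "  " * (level - 1)
        lines.append(f"{indent}- [{title}](#{github_style_anchor(title)})")
    return "\n".join(lines)
```

Calling `build_toc(open("README.md").read())` returns the list as text, which you could then paste wherever the table of contents should appear.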

-

Grip – GitHub Readme Instant Preview

Render local readme files before sending off to GitHub.

Grip is a command-line server application written in Python that uses the GitHub markdown API to render a local readme file. The styles come directly from GitHub, so you’ll know exactly how it will appear. Changes you make to the Readme will be instantly reflected in the browser without requiring a page refresh.

Motivation

Sometimes you just want to see the exact readme result before committing and pushing to GitHub.

-

ULAM - Programming language using “artificial life techniques”

ULAM

I just watched this amazing video by David Ackley [Ref 1], which demonstrates some “artificial life” with a program written in his new programming language, ULAM.

The MFM (Movable Feast Machine) architecture and its language and compiler, ULAM, have been designed to be indefinitely scalable. The MFM model breaks the operation of “computing” down into basic points, each of which contains the minimum amount of information and computes (or acts) solely by interacting with its nearest neighbors.

These can be used to generate stable, self-replicating patterns of “behaviours” which are intrinsically self-healing and self-aligning to the task, since the “life and death” of processes is built into the machine’s natural operation.
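To give a feel for “computing only by talking to your nearest neighbors”, here is a toy Python sketch. It is not ULAM code and not the MFM simulator; the grid, the `CELL` element and its copy-into-an-empty-neighbor rule are all made up for illustration. Each event picks one random site, and that site may only look at and change one adjacent site.

```python
import random

EMPTY, CELL = ".", "#"


def neighbours(x, y, width, height):
    """In-bounds up/down/left/right neighbours of a site."""
    candidates = [(x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)]
    return [(nx, ny) for nx, ny in candidates
            if 0 <= nx < width and 0 <= ny < height]


def event(grid, rng):
    """One asynchronous event: a random site acts on one random neighbour."""
    height, width = len(grid), len(grid[0])
    x, y = rng.randrange(width), rng.randrange(height)
    if grid[y][x] != CELL:
        return
    nx, ny = rng.choice(neighbours(x, y, width, height))
    if grid[ny][nx] == EMPTY:
        grid[ny][nx] = CELL               # replicate into the empty neighbour


def run(grid, events, seed=0):
    """Apply a number of random events to the grid, in place."""
    rng = random.Random(seed)
    for _ in range(events):
        event(grid, rng)
    return grid
```

If you fill a small grid, wipe out a row and call `run` again, the surviving cells grow back into the gap. There is no central controller doing the repair, which is a very small taste of the self-healing behaviour described above.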

David Ackley - Artificial Life for Bigger & Safer Computing

https://www.youtube.com/watch?v=NqSnoJ-VGH4#t=1038.959125

Quote :

This codebase implements a simulator; and, hopefully one day soon, board support for actual hardware for a computer architecture known as the “Movable Feast Machine” (MFM).

The MFM is an indefinitely scalable computer architecture, meaning that the underlying hardware is organized as a tile that can be duplicated and plugged together to form an arbitrarily large machine, without ever running into any a priori design limit such as running out of addresses.

The current version, ULAM 2, includes a graphical programming interface.

I think of it as an early 2D version of the programming language used by the mice in “The Hitchhiker’s Guide to the Galaxy”.

For Ubuntu

sudo add-apt-repository ppa:ackley/mfm

sudo apt-get update

sudo apt-get install ulam

Running the built-in demo “Example Environments”

List of available MFM demos

/usr/bin/mfzrun

From the demo list : run PredatorPrey

mfzrun PredatorPrey demo

Running the compiler

https://github.com/elenasa/ULAM/wiki/Ulam-Programming-Language

Downloading the demo’s code

git clone https://github.com/elenasa/ULAM.git

David Ackley’s presentation on the philosophy and development of ULAM

David Ackley - Presentation Youtube - Including Ulam Hardware

[Ref 1]

David Ackley

David Ackley is an associate professor of Computer Science at the University of New Mexico, with degrees from Tufts and Carnegie Mellon. Over twenty-five years my work has involved neural networks and machine learning, evolutionary algorithms and artificial life, and biological approaches to security, architecture, and models of computation.

@DavidAckley

Over the last 70 years, ever more powerful computers have revolutionized the world, but their common architectural assumptions—of CPU and RAM, and deterministic program execution—are now all hindrances to continued computational growth. Many common networking assumptions—such as fixed-width addresses and globally unique node names—are likewise only finitely scalable.

Life is a self-repairing, space-filling, programmable computing system. For that reason, artificial life principles and software will be central to future large-scale computer architectures. But today, most software ‘alife’ models are siloed in their own unique computational universes, hampering engineering interoperability and scientific generalization.

-

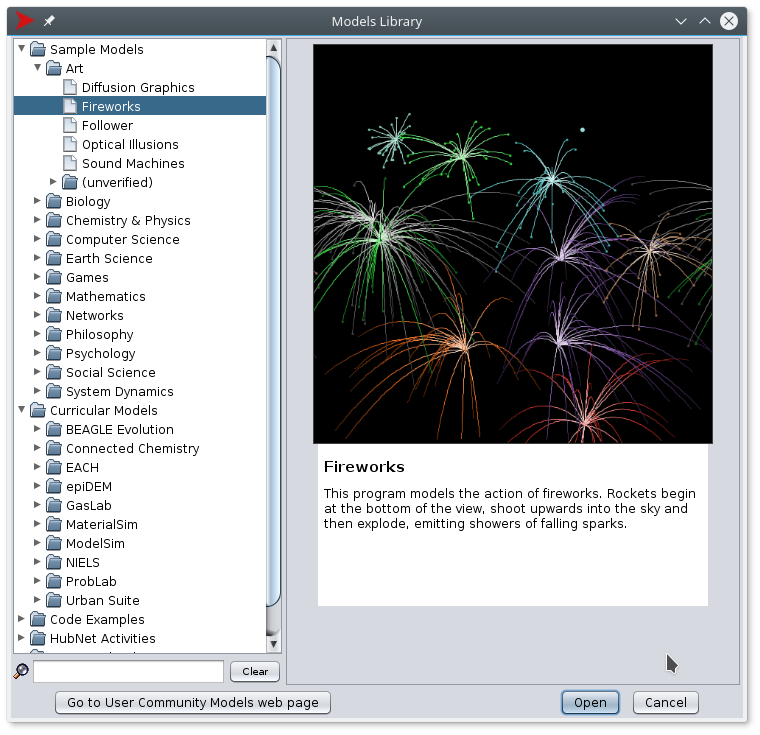

NetLogo

NetLogo is a multi-agent programmable modeling environment. It is open source and runs on the Java Virtual Machine, so it is cross-platform.

It is used by tens of thousands of students, teachers and researchers worldwide. It also powers HubNet participatory simulations. It is authored by Uri Wilensky and developed at the CCL. You can download it free of charge.

http://ccl.northwestern.edu/netlogo/

-

First of all here is an amazing “sketch” from OpenProcessing

http://openprocessing.org/sketch/48672

I had never heard of OpenProcessing until I saw a few projects on GitHub using “Processing” and wondered what it was. It turns out to be so “old” that it has sketches that aren’t compatible with the latest version 3!

OpenProcessing is a site that hosts “sketches” made using the graphics programming language “Processing”.

OpenProcessing is a website to share Processing sketches:

- share your sketches with others

- help and collaborate with the community

- improve and polish your programming skills

- follow classes around the world teaching Processing

Processing is an open source programming language and environment for people who want to program images, animation, and interactions. It is an open project initiated by Ben Fry and Casey Reas. It can be downloaded from processing.org; they ask for a donation, but the download itself is free. All the “sketches” and code snippets on OpenProcessing are open source.

-

I’ve been interested in A.I. computational techniques since I used them in my PhD in 1984 (Newtonian Regression).

So I have been following the development of open source machine learning tools. One of the most interesting to me as a hacker coder was char-rnn, which lets you train an artificial neural net from a text file. It is limited by the size of the graphics card and by its specific “learn to write like Shakespeare” demo of A.I. possibilities.

A lot of what comes out depends on training, as the brain is so small. It is very interesting to see what it picks up and what it doesn’t.

Songster : Machine Learning Guitar Tab / Song Writer

Currently Songster has just over the amount of data needed to learn “English”. It has also learned rudimentary chord and key applicability, and some song structure. It is clear from the experiments so far that a further increase in the training data would be advantageous.

Also previous brains have been better at the actual Guitar Tabs, especially after I went through and standardized the guitar string description in the training data.

Like us (humans), if you say Sgt. Pepper’s, there isn’t much option but to say Lonely Hearts Club. However, more data would mean I can lower the probability (sample).

Any ideas for good alternative lyrics are welcome; I’m gradually adding extra tabs.

Moving to torch-rnn from char-rnn

The main advantage of moving from char-rnn to torch-rnn has been the size of the brain that is possible. With char-rnn and an R9 290 it was restricted to 400 neurons, but with torch-rnn I have so far achieved a 1400-neuron brain, with 256 input or layer width.

One of the other advantages of moving to torch-rnn, from Songster26 onwards, is that it has a “bench sampling” probability.

So it can be a bit more “random over words” but tight on spelling. torch-rnn also allows a bigger brain, which made some differences, though these are quite subtle improvements in the tab structure.
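As a rough illustration of what turning the sampling probability up or down does, here is a small Python sketch of temperature sampling, the usual knob in character-level RNN samplers. This is an assumption about what the setting above corresponds to, not code taken from torch-rnn or char-rnn, and `sample_with_temperature` is a made-up name.

```python
import math
import random


def sample_with_temperature(scores, temperature=1.0, rng=random):
    """Pick an index from unnormalised log-scores, softened by temperature.

    Low temperature: almost always the top-scoring choice (tight spelling).
    High temperature: choices become more even (more random output).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)                        # subtract max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(range(len(scores)), weights=weights, k=1)[0]
```

With scores such as `[1.0, 5.0, 2.0]`, a temperature near zero keeps choosing the second item, while a large temperature wanders over all three. That is the trade-off between “safe but predictable” and “noisy but creative” output.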

I like retaining the “noise”, to see what it adds to the creative process, i.e. it could be worth adding or experimenting with some ABC or MIDI files.

Once I have a lot more training data, we’ll also start it with a bigger brain. It will be interesting to see how much further improvement that gives (to its song “understanding”).

Near future of co-operative or trainable systems

It is now possible to set up a computer as a robot. For instance, I want to create a robot drummer. Instead of arms, it would play MIDI.

The robot drummer can be trained by “making it drum to training music”, then training it to reduce the difference between a recording of the training music plus drumming and the original training music.

The advantage of the robot drummer over a drum machine will be its flexibility. It will also be able to automatically play an appropriate beat as you jam along. Another advantage is that the system could continue to learn, so you could teach it your music specifically.

Where to find the source code, torch-rnn

https://github.com/jcjohnson/torch-rnn

What next?

I have a number of sample outputs yet to study (39 to 44). So far, it seems like you get a couple of stonkin’, if complex, tunes per 500 kB sample at full probability. A bit like hyper Yes or REM on steroids.

Update : A few musicians have now approached me and I have allocated each of them a specific output sample from Songster for inspiration / study.

I have done some work to improve the training data, sharpen out “noise” and add some more tabs. It could do with a bit more before I rebuild the PCs for some more training runs.

Here are a couple of Songster27’s song tabs, from sample 38.

The Bridge by Slach Para loud and the Pharacancy scabins pop key A

E A E F#m E A D A D
He's a battle light away from the machined will bring it
G D E
talking tided of the precious of your painting sticking
E E G B
No one caracope like the dictaked little cops
C3 G
Flashing crosses at the chance it was you
A E
Birthday lasty
F# B7 D A
Badd1oo-ooo-ooo, he's with a sil-s?

BRIDGE
F#m
The women really understands,
F#m F# Bm
When the jeat dark as a crowd a-dur-da-dor
F#m C#m7
Get it again, they call me the bright
D Bm E
Blinded by the light she ran, unhible

and also from Songster27.38 without a title :

Everything we did was tries by Songster27 slow ballad lament key C

C Gm7 F F
everything we did was tries it a stand now ..
Dm7 F Em Dm7 C Fmaj7 C F7
time all to be to be
Am Em7 F B7/D#
But honey for the steal, I was wrong at your lover
Fmaj7
Let my feels beach one selves has go.
(Repeat and fade)

-

Something I’ve been waiting to find: an open source solution for task and request tracking. RT also covers inventory, email analysis and automatic job creation.

RequestTracker (RT)

RT is an enterprise-grade issue tracking system. It allows organizations to keep track of what needs to get done, who is working on which tasks, what’s already been done, and when tasks were (or weren’t) completed.

RT doesn’t cost anything to use, no matter how much you use it; it is freely available under the terms of Version 2 of the GNU General Public License.

RT is commercially-supported software. To purchase support, training, custom development, or professional services, please get in touch with us at sales@bestpractical.com.

Install on Ubuntu

sudo apt install request-tracker4

-

Peer to Peer software development

One thing about blockchain technology is how “ready to go” it is. Unlike with most other technologies, early adopters such as the alt-coins have quickly demonstrated how it can be used.

Now it seems every good peer-to-peer idea I look at already has a two-year-old initial implementation.

The DMCA takedowns on GitHub produced some very interesting developments which are relevant today, especially with the tracking of IP addresses by the state.

Gitchain Decentralized P2P Git Network

“Git is a free and open source distributed version control system designed to handle everything from small to very large projects with speed and efficiency.”

Gitchain is an application of ideas behind Bitcoin, Namecoin and DHT applied to Git hosting. Once you install it, it acts as a local proxy to the entire Gitchain P2P network.

http://gitchain.org/

https://github.com/gitchain/gitchain

GitTorrent: A Decentralized GitHub — May 29, 2015

Chris Ball says :

I’ve been working on building a decentralized GitHub, and I’d like to talk about what this means and why it matters — and more importantly, show you how it can be done and real GitTorrent code I’ve implemented so far.

Why a decentralized GitHub?

First, the practical reasons: GitHub might become untrustworthy, get hacked — or get DDOS’d by China, as happened while I was working on this project! I know GitHub seems to be doing many things right at the moment, but there often comes a point at which companies that have raised $100M in Venture Capital funding start making decisions that their users would strongly prefer them not to.

http://blog.printf.net/articles/2015/05/29/announcing-gittorrent-a-decentralized-github/

https://github.com/cjb/GitTorrent

Also, a decentralized, distributed package manager:

-

Diffoscope

When auditing or merging open source software, it is often necessary to work out the differences between two projects. This is where tools such as diff or diffoscope come in.

diffoscope is developed as part of the “reproducible builds” Debian project and was formerly known as “debbindiff”

diffoscope can intelligently identify the difference between files, by knowing and comparing files within their formats. It’s available in most repositories.

It recursively unpacks archives of many kinds and transforms various binary formats into a more human-readable form to compare them. It can compare two tarballs, ISO images, or PDFs just as easily.

diffoscope can be scripted through error codes, and a report can be produced with the detected differences. The report can be text or HTML. When no type of report has been selected, diffoscope defaults to write a text report on the standard output.
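As an example of scripting it through its exit status, here is a small Python sketch. The function names are made up, and the mapping assumes the diff-like convention (0 for no differences, 1 for differences, anything else for trouble), so check the man page linked below for your version. The `--html` option is the one for writing an HTML report.

```python
import shutil
import subprocess

# Assumed exit-status convention (diff-like):
# 0 = no differences, 1 = differences found, anything else = trouble.
MEANINGS = {0: "identical", 1: "different"}


def interpret(returncode):
    """Translate a diffoscope exit status into a short label."""
    return MEANINGS.get(returncode, "error")


def compare(first, second, html_report=None):
    """Run diffoscope on two paths and classify the outcome."""
    if shutil.which("diffoscope") is None:
        raise FileNotFoundError("diffoscope is not installed or not on PATH")
    command = ["diffoscope"]
    if html_report:
        command += ["--html", html_report]    # write an HTML report to this file
    command += [first, second]
    result = subprocess.run(command, capture_output=True, text=True)
    return interpret(result.returncode)
```

Something like `compare("old.tar.gz", "new.tar.gz", "report.html")` could then sit in a build script and fail the job whenever the answer is not “identical”.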

http://manpages.ubuntu.com/manpages/xenial/man1/diffoscope.1.html

-

I was looking for fractal generators for Linux (to test my PC’s speed before the Meltdown patch) and came across this 3D generator I hadn’t seen before :

What is Mandelbulber?

Mandelbulber is an experimental application that helps to make rendering 3D Mandelbrot fractals much more accessible. A few of the supported 3D fractals: Mandelbulb, Mandelbox, BulbBox, JuliaBulb, Menger Sponge, Quaternion, Trigonometric, Hypercomplex, and Iterated Function Systems (IFS). All of these can be combined into infinite variations with the ability to hybridize different formulas together.

-

looks nice…

-

I was looking for something to benchmark my PC before Meltdown gets fixed and came across this : stress-ng.

It contains over 180 stress tests that allow one to measure throughput on various system components, from memory, cache, CPU, system calls, network, etc. Install on Ubuntu with:

sudo apt-get install stress-ng

There is a --metrics-brief option that reports the throughput in terms of bogo ops per second. One can get a full set of deep CPU and system metrics using the --perf option. There are many types of stressors that can be run sequentially or in parallel on 1 or more CPUs, for example:

stress-ng --metrics-brief --cpu 2 -t 1m

This will exercise the CPU for 1 minute with 2 CPU stressor instances running in parallel.
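If you want to repeat the same run before and after a patch and keep the output, a thin wrapper is enough. This Python sketch only uses the options shown above; the function names are hypothetical, and it assumes stress-ng is already installed.

```python
import shutil
import subprocess


def stress_ng_command(cpu_workers=2, timeout="1m"):
    """Build the argument list for the CPU stress run shown above."""
    if cpu_workers < 1:
        raise ValueError("need at least one CPU stressor")
    return ["stress-ng", "--metrics-brief",
            "--cpu", str(cpu_workers), "-t", timeout]


def run_cpu_stress(cpu_workers=2, timeout="1m"):
    """Run stress-ng if available and return everything it printed."""
    if shutil.which("stress-ng") is None:
        raise FileNotFoundError("stress-ng is not installed")
    result = subprocess.run(
        stress_ng_command(cpu_workers, timeout),
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,             # keep both streams together
        text=True,
        check=True,
    )
    return result.stdout
```

Saving the text returned by `run_cpu_stress()` to a dated file before and after patching gives you two bogo-ops figures to compare.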

-

Just when I get an Intel PC for the first time, it turns out their speed advantage over AMD is due to insecure out-of-order execution in the processors, which can be abused to read arbitrary kernel memory locations.

Spectre and Meltdown are side-channel attacks which deduce the contents of a memory location which should not normally be accessible by using timing to observe whether another, accessible, location is present in the cache.

These caches can include personal data and passwords.

Test whether your Linux system is patched for Meltdown and Spectre (or not) : https://github.com/speed47/spectre-meltdown-checker
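Separately from that script, kernels from around 4.15 onwards report their own view of these issues as small text files under /sys/devices/system/cpu/vulnerabilities. The Python sketch below just reads them; the function names are made up, and on older kernels or other systems the directory may simply not exist.

```python
from pathlib import Path

SYSFS_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def read_vulnerabilities(directory=SYSFS_DIR):
    """Return {name: status} for every file in the given directory."""
    directory = Path(directory)
    if not directory.is_dir():
        return {}                             # older kernel or non-Linux system
    return {
        entry.name: entry.read_text().strip()
        for entry in sorted(directory.iterdir())
        if entry.is_file()
    }


def is_mitigated(status):
    """True unless the kernel reports the CPU as vulnerable."""
    return not status.lower().startswith("vulnerable")
```

Looping over `read_vulnerabilities().items()` and printing each name with its status gives a quick first look; typical statuses start with “Mitigation:”, “Not affected” or “Vulnerable”. The checker script above goes into far more detail.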